Whitepaper

Women in Media Gender Scorecard 2022

From major newspapers, to television, to radio, the Australian media is not a level playing field, with women still underrepresented.

Mass AI-generated content has overwhelmed our social feeds, and that has set off a sense of panic among PR leaders. Brand communication was already tricky, but AI content has exacerbated the problem even further. Audiences have been left confused: they cannot tell real personalities from fake ones online, and they are misled by fake statements circulated to deceive them. This has left leadership wondering how to cut through and make an impact on target audiences who are currently overstimulated by AI-driven content.

We analysed 30M data points collected globally between 1 January 2025 and 12 August 2025 across social and mainstream media, including X, forums, online news, LinkedIn, TikTok, Instagram and Facebook. We looked at real-world cases of audiences being misled into believing something was real, and at how this started a domino effect on the content the world consumes today.

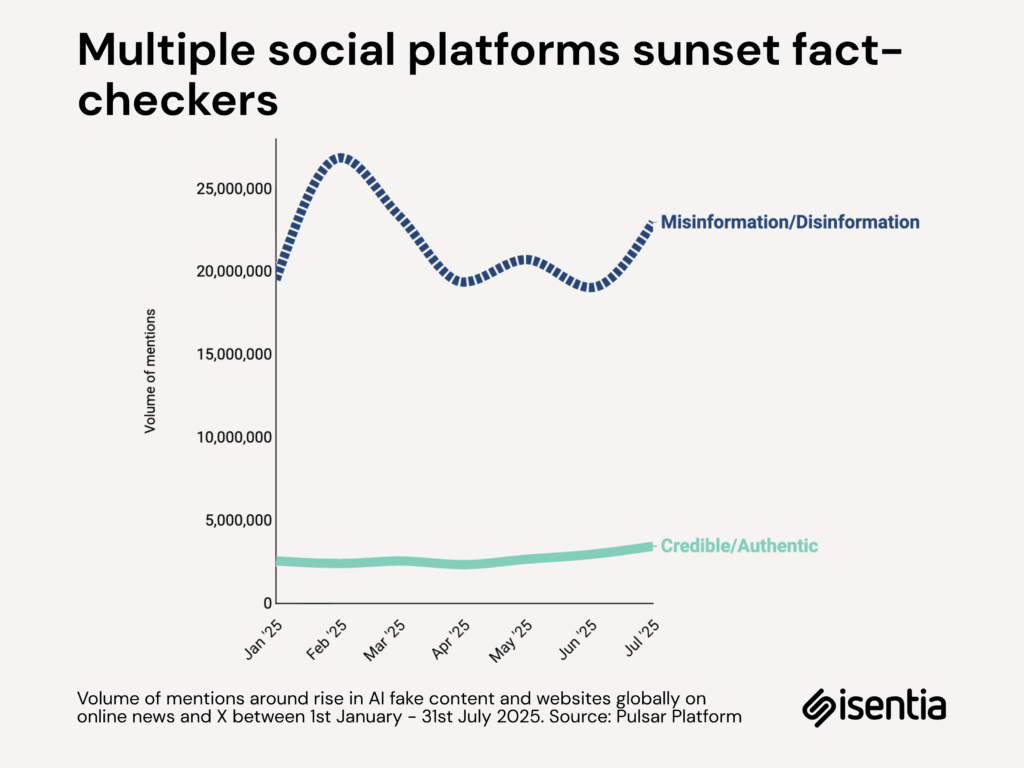

Synthetic media has taken over the internet, and audience feeds have been flooded with irrelevant and unreliable AI content. Importantly, at this volume, unreliable content can pull audiences into a dangerous spiral of consuming nonsensical material that benefits nobody. As a result, audiences have become defensive about the content they see, choosing to engage only with what conforms to their expectations. With a decline in the number of fact-checkers, misinformation and disinformation have become rampant.

A real-world case occurred in the US, when a squirrel named Peanut ("P-Nut") was euthanised by authorities after being kept illegally by its owner. A fake statement attributed to Donald Trump, disagreeing with the authorities' decision, was widely circulated on X. Audiences sympathised with the statement and stood by Trump, going as far as gathering support to make him the next President. The effect snowballed because audiences saw a public figure support what they were already thinking: the statement conformed to their expectations. Although the statement involved no AI, it has become a case in point for understanding how audiences perceive AI content. If they like what they see, whether it was made by a human or by AI, they do not spare a second to confirm its authenticity.

Mia Zelu, a virtual influencer, grew popular during this year's Wimbledon. She uploaded an Instagram photo carousel that appeared to show her physically at the All England Club, enjoying a drink. At first she seemed real, but the media and audiences quickly questioned the images. She posted them, with a caption, in July during the tournament, then quickly disabled her comment section, adding to the mystery and debate. There was significant online backlash, with audiences clearly frustrated at how easily they could be deceived. Despite the backlash, her follower count grew, and her account now has about 168k followers.

The conversation around AI influencers is only just beginning, and it raises serious questions about authenticity, digital consumption and how AI personas can shape audience perceptions without actually existing.

According to the AI Marketing Benchmark Report 2024, this trust deficit directly affects brand communication strategies: 36.7% of marketers worry about the authenticity of AI-driven content, while 71% of consumers admit they struggle to trust what they see or hear because of AI.

Audiences are not rejecting AI outright, but the opacity around its use could be dangerous, leaving their confidence in AI as a tool shaky. This is where PR leaders need to treat authentic communication as a necessity, not just a "nice-to-have". At a time when audiences doubt whether a message was written by a human or a machine, the value of genuine, human-driven storytelling rises.

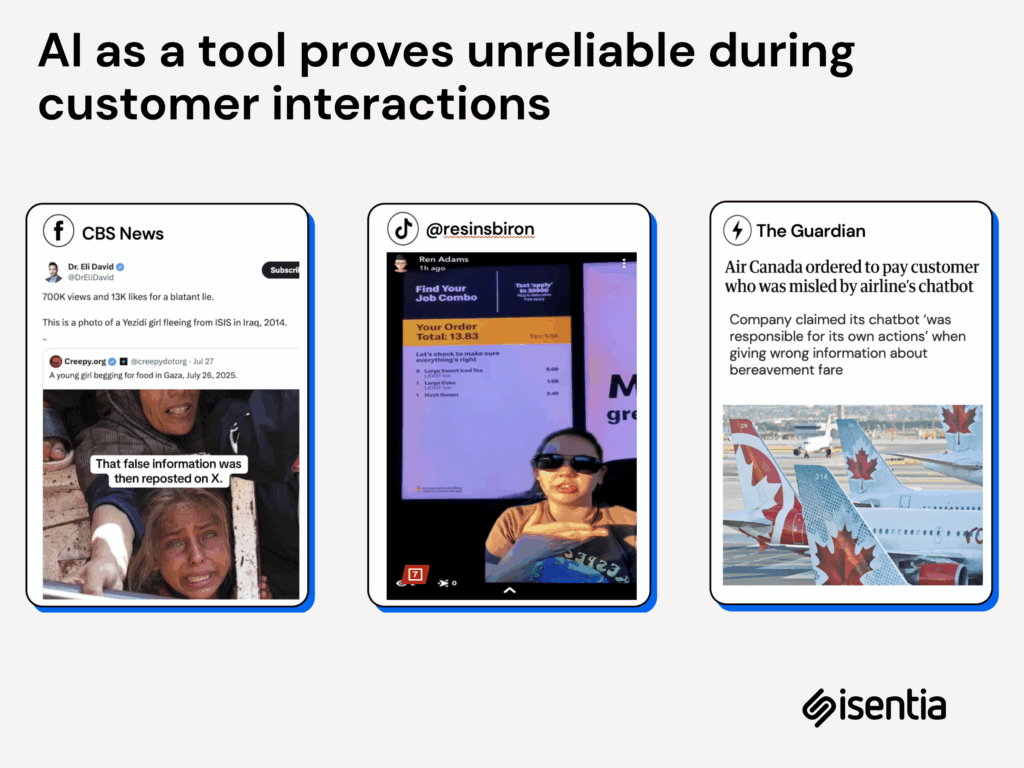

Scepticism towards AI doesn’t just come from high-profile controversies. It shows up in small, everyday moments that frustrate audiences and remind them how fragile trust can be.

The November 2024 Coca-Cola holiday campaign controversy exemplifies how quickly AI-generated content can trigger consumer backlash. When Coca-Cola used AI to create three holiday commercials, the response was overwhelmingly negative, with both consumers and creative professionals condemning the company's decision not to employ human artists. Despite Coca-Cola's defence that it remains dedicated to creating work that involves both human creativity and technology, the incident highlighted how AI use in creative content can be perceived as a betrayal of brand authenticity. That perception was particularly damaging for a company whose holiday campaigns have historically celebrated human connection and nostalgia.

A response like this to a multinational company makes clear what audiences expect to consume. PR leaders and marketers need to tread carefully when creating content, making sure they do not depend on AI so heavily that anyone can point out the absence of human creativity. Authenticity is only in crisis when we relinquish our control over AI. This calls for more fact-checkers and more audits of brands and leadership.

Interested in learning how Isentia can help? Fill in your details below to access the full Authenticity Report 2025 that uncovers cues for measuring brand and stakeholder authenticity.
